Playing with Reinforcement Learning

Reinforcement Learning for drones with the Robotics and Perception Group

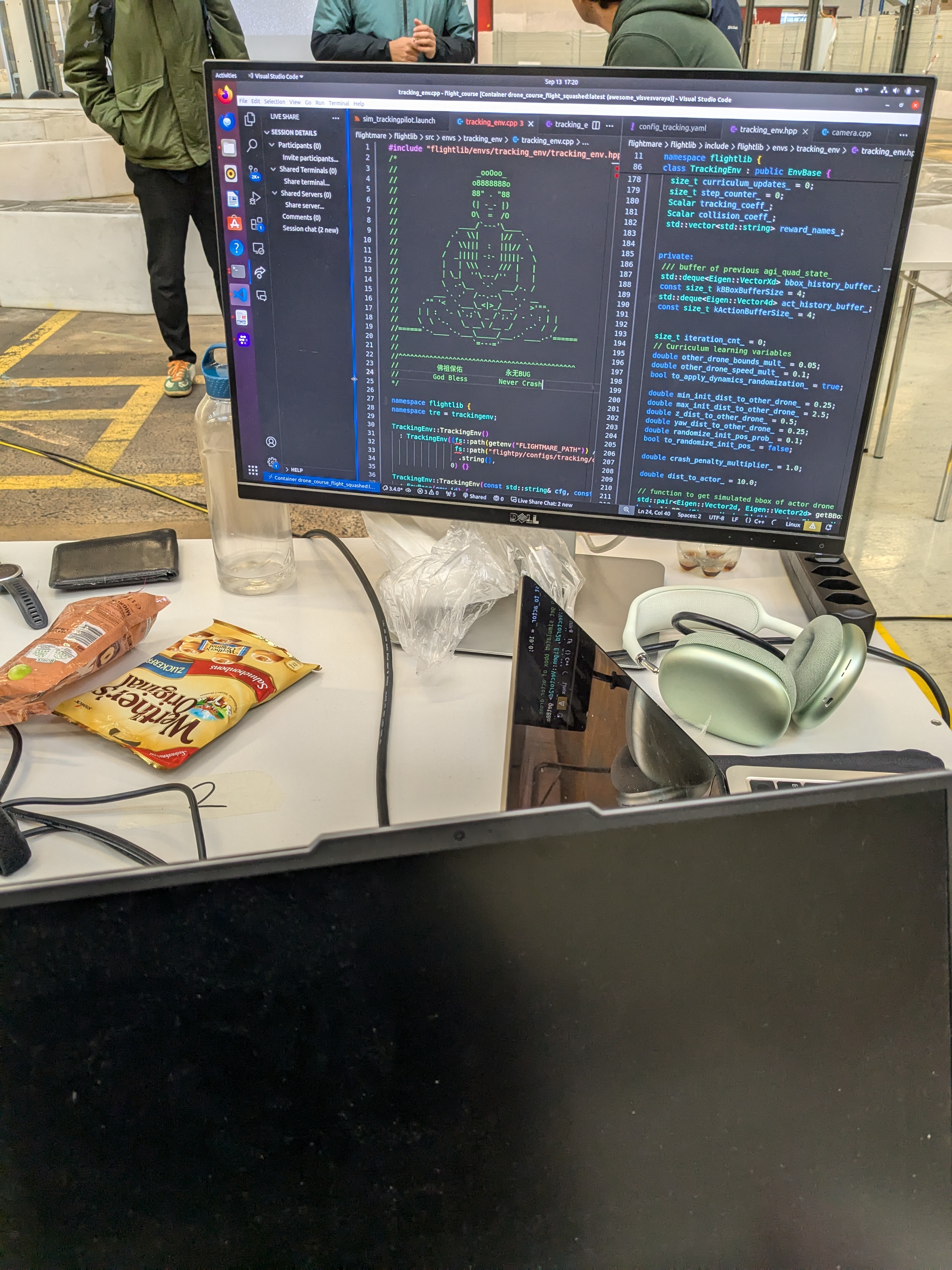

I participated in the “Vision-based Drone Flight” course run by the Robotics and Perception Group (RPG) at the University of Zurich. In short, we used their state-of-the-art simulator and infrastructure to play around with an RL policy to control drones!

RPG has been working on agile drone flight for quite some time, and has even developed an RL-based drone pilot that rivals professional human pilots on a fixed drone racing course. We used the simulator and associated machinery behind that work to develop an RL policy for a “camera drone” that tracks an “actor drone”. Think of what a DJI drone can do: autonomously following and filming a person or object.

The final task was for our “camera” drone to detect the “actor” drone with some sort of YOLO model, which would give a bounding box as input to the RL policy. The policy would then automatically track the actor drone as it moved around the environment. In practice, because the course lasted only one week, our group only managed to get the camera drone to follow the actor drone when fed the ground-truth state of the actor drone.

This was quite an interesting experience. We learned how important reward shaping is to RL policies. The RL algorithms themselves are not very complicated, but the machinery around them (simulators, dashboards, validation) matters a lot: getting it right, and fast, is a core part of enabling the impressive results of RL research.
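To give a feel for what reward shaping means in a tracking task like this, here is a minimal sketch of a shaped reward. It is an illustration only, not the reward from the course: the function name `tracking_reward`, the three terms, the target distance, and all the weights are assumptions made up for this example.

```python
import numpy as np


def tracking_reward(cam_pos, cam_forward, actor_pos, action,
                    target_dist=2.0, w_dist=1.0, w_view=0.5, w_act=0.01):
    """Hypothetical shaped reward for a camera drone following an actor drone.

    All terms and weights are illustrative assumptions.
    """
    cam_pos = np.asarray(cam_pos, dtype=float)
    cam_forward = np.asarray(cam_forward, dtype=float)
    actor_pos = np.asarray(actor_pos, dtype=float)
    action = np.asarray(action, dtype=float)

    rel = actor_pos - cam_pos
    dist = np.linalg.norm(rel)

    # Distance term: 0 at the desired following distance, negative otherwise.
    r_dist = -abs(dist - target_dist)

    # View term: cosine of the angle between the camera's forward axis and
    # the direction to the actor (1 = actor centred, -1 = directly behind).
    direction = rel / max(dist, 1e-6)
    forward = cam_forward / max(np.linalg.norm(cam_forward), 1e-6)
    r_view = float(np.dot(forward, direction))

    # Effort term: penalise large commands to discourage jerky flight.
    r_act = -float(np.sum(np.square(action)))

    return w_dist * r_dist + w_view * r_view + w_act * r_act


# Camera at the target distance, looking straight at the actor, no control effort.
good = tracking_reward([0, 0, 0], [1, 0, 0], [2, 0, 0], [0, 0, 0, 0])
# Camera too far away, facing the wrong way, with large commands.
bad = tracking_reward([0, 0, 0], [-1, 0, 0], [5, 0, 0], [1, 1, 1, 1])
```

Even in a toy like this, the relative weights decide what the policy ends up caring about: push `w_act` too high and the drone may prefer to sit still, push `w_view` too high and it may keep the actor centred while drifting away.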

The course was quite intensive, but we had some time to fool around…

Here is a video of our policy tracking the actor drone (with blue LEDs).

For those interested, we wrote a report that explains the details a little better: